Khmelnitsky test: performing the test requires some care, as the score displayed with the 'info' key after interrupting the search is sometimes unreliable. I use the infinite level (B8) and stop the search after exactly three minutes of thinking time (or one minute in some situations); sometimes the score is then recomputed on the fly from an instant, single-ply search. It is therefore essential to enable the evaluation display option while the computer is thinking, and to use the value that was displayed just before stopping the search.

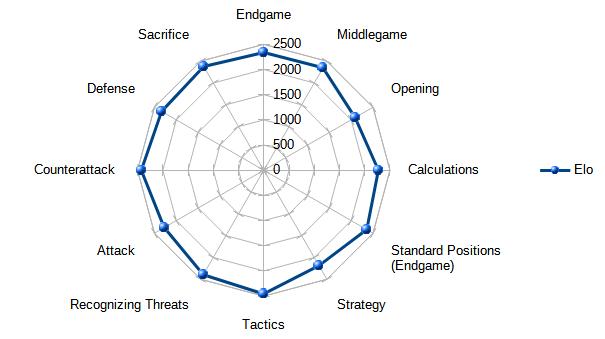

As expected, the Mephisto Chess Challenger's profile is close to that of the GK2000, which comes from the same author and is powered by the same processor, except that it scores roughly a hundred points higher (the 32 KB ROM left room to enhance the program, compared to only 16 KB for the GK2000). The main strengths remain tactics, recognizing threats, and counterattacks. The endgame is no better; on the other hand, the algorithms have been significantly reworked to strengthen the middlegame play. The Chess Challenger is particularly comfortable in intricate positions with a lot of pieces, where its tactical skills rule. In return, still compared to the GK2000, the level of play in the opening has dropped noticeably (meaning play out of book, when most pieces are still on the board but the tactics are not yet very complex). A human player of the same average level has significantly better attacking skills, but would be wise to keep the game simple and play cautiously until the endgame is reached.

In conclusion, I should point out that the H8 bug affected the test in 4 out of 100 positions.

Year: 2004

